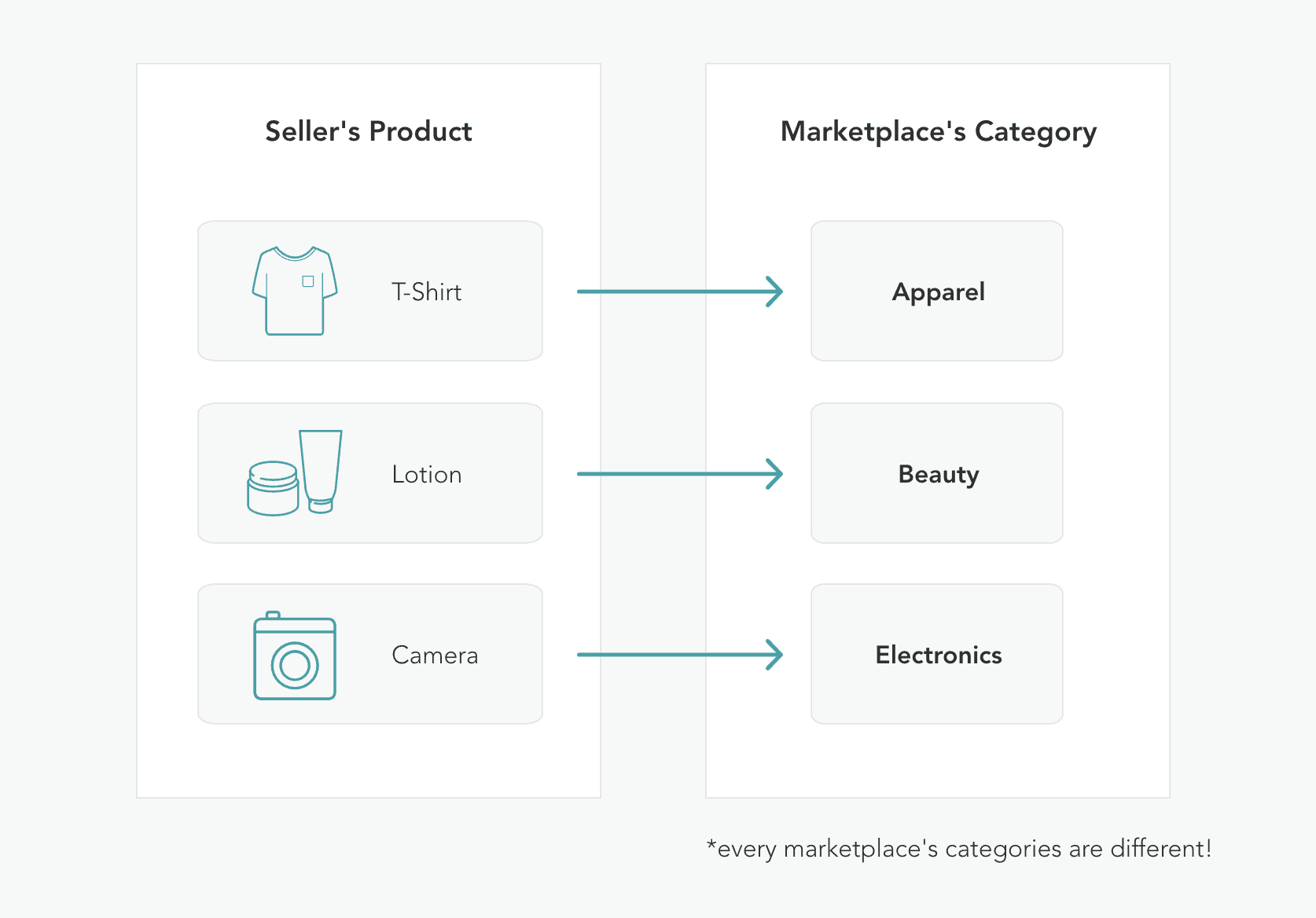

*repeat for EVERY marketplace!*

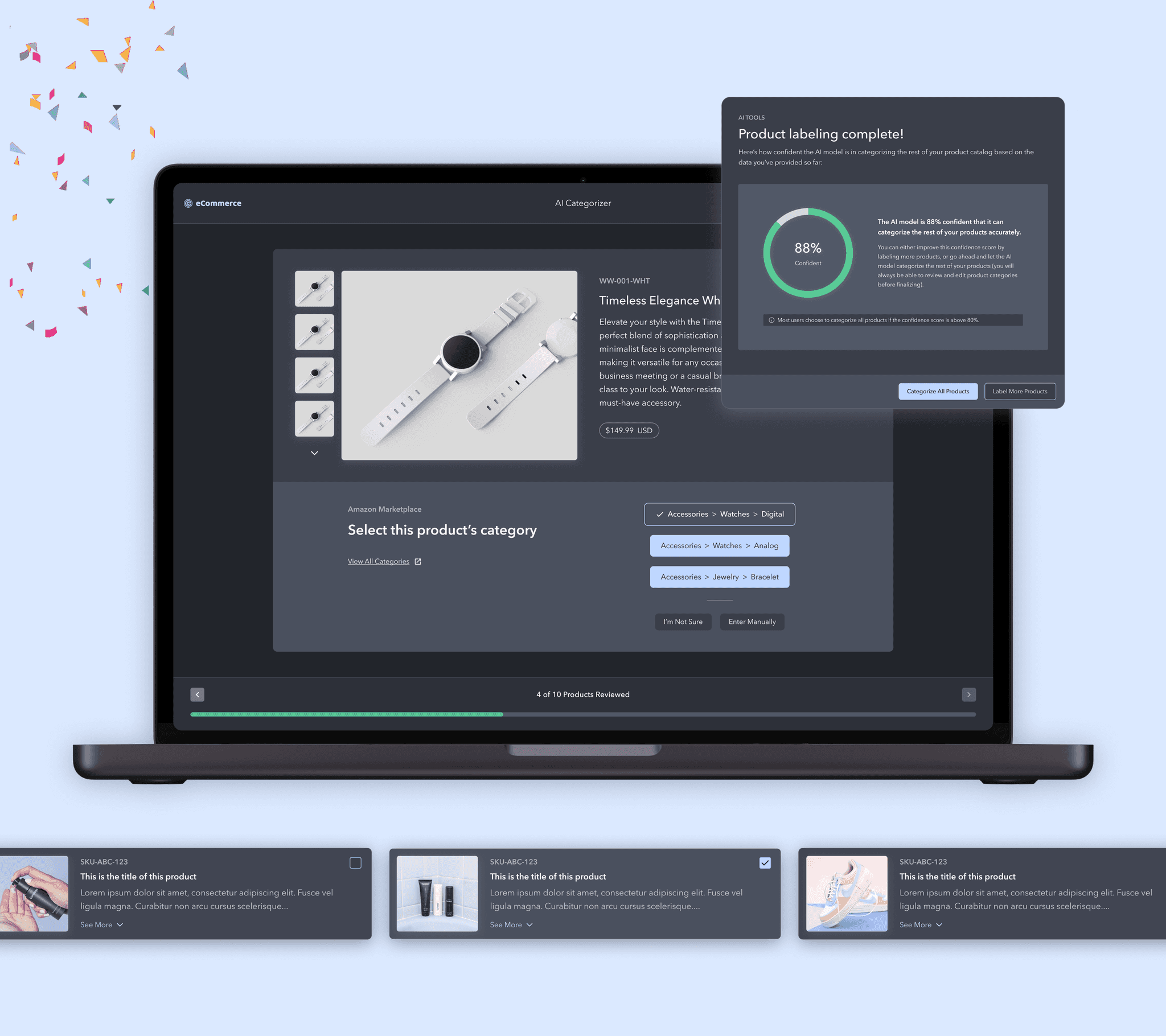

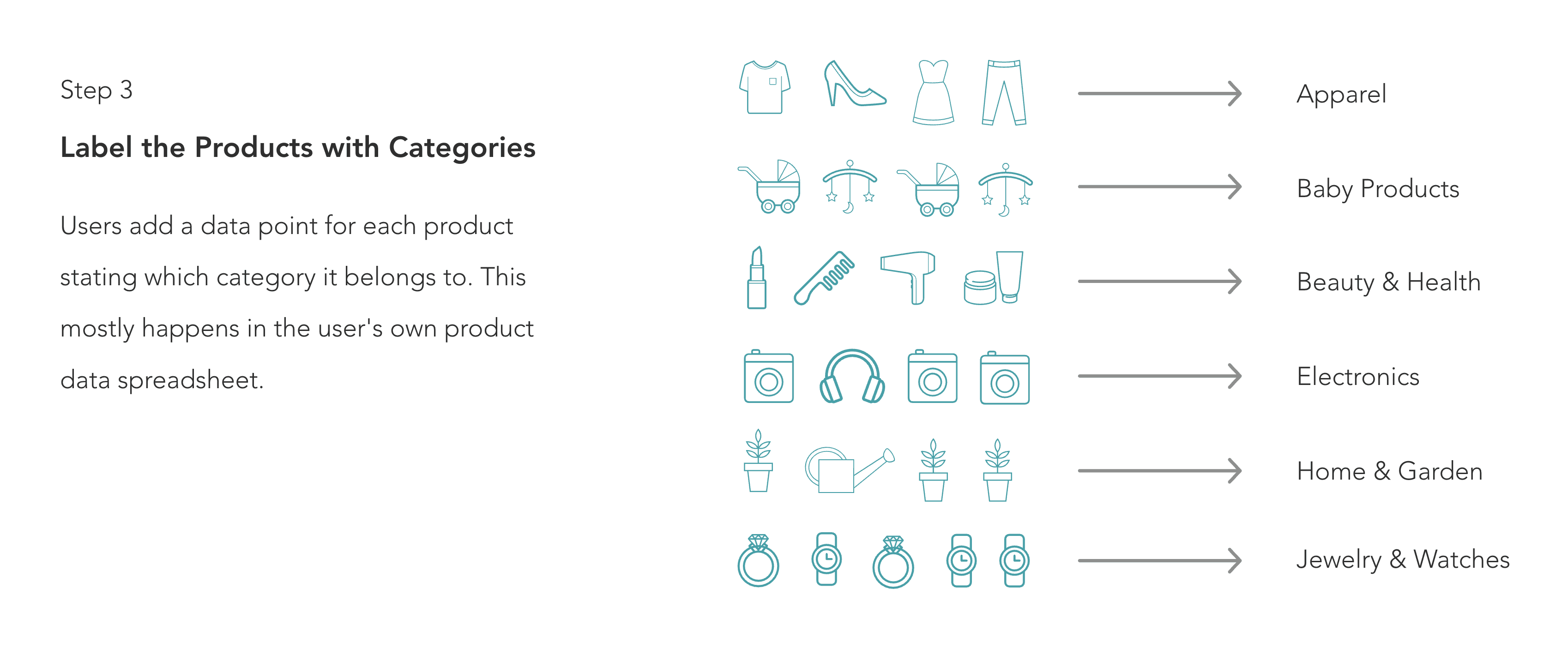

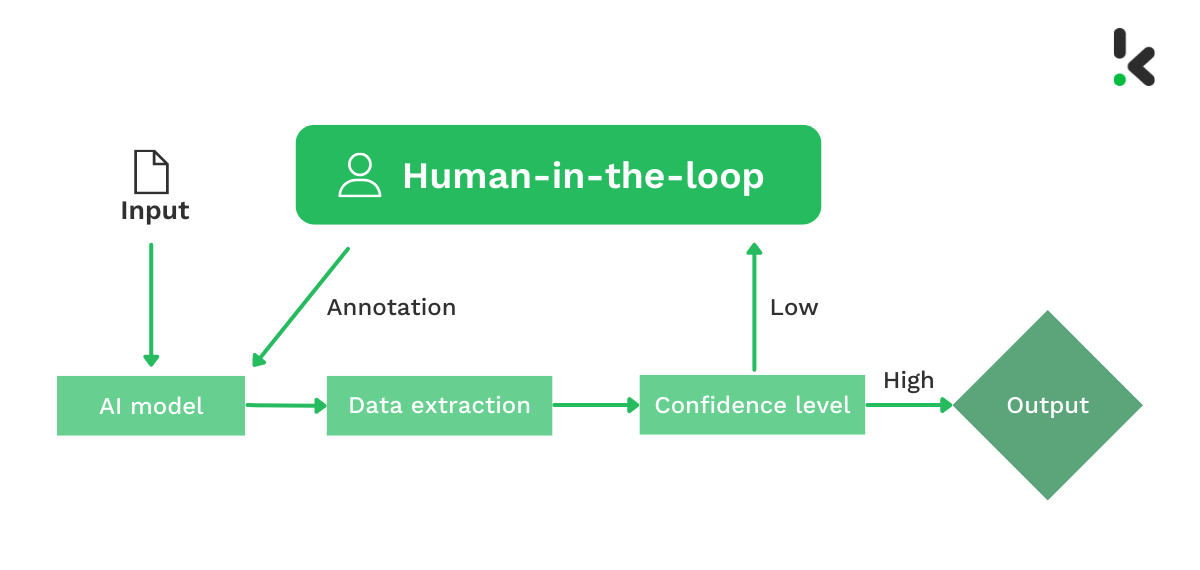

The AI model will analyze the input (the user's product data), categorize products according to the marketplace's requirements, and assign a confidence level to each category mapping.

If the model is not confident in a product's category mapping, it will surface that product so the user can manually select the correct category. The model will then learn from this input and apply that knowledge to similar products.
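To make the confidence-based hand-off concrete, here is a minimal Python sketch of how predictions might be split into ones the model keeps and ones surfaced to the user. The `Prediction` type, the `split_by_confidence` helper, and the 0.8 cutoff are hypothetical. The case study does not describe the actual model or threshold.

```python
from dataclasses import dataclass

# Hypothetical cutoff: predictions below this are surfaced for manual review.
CONFIDENCE_THRESHOLD = 0.8


@dataclass
class Prediction:
    product_id: str
    category: str      # predicted lowest-level subcategory
    confidence: float  # model's probability for that category, 0.0 to 1.0


def split_by_confidence(predictions, threshold=CONFIDENCE_THRESHOLD):
    """Return (accepted, needs_review): confident predictions vs. ones the user should check."""
    accepted, needs_review = [], []
    for p in predictions:
        if p.confidence >= threshold:
            accepted.append(p)
        else:
            needs_review.append(p)
    return accepted, needs_review


preds = [
    Prediction("sku-1", "Shoes > Running Shoes", 0.95),
    Prediction("sku-2", "Bags > Tote Bags", 0.42),
]
accepted, needs_review = split_by_confidence(preds)
```

Products in `needs_review` are the ones the user would see. Their answers would then be fed back to the model as training labels.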

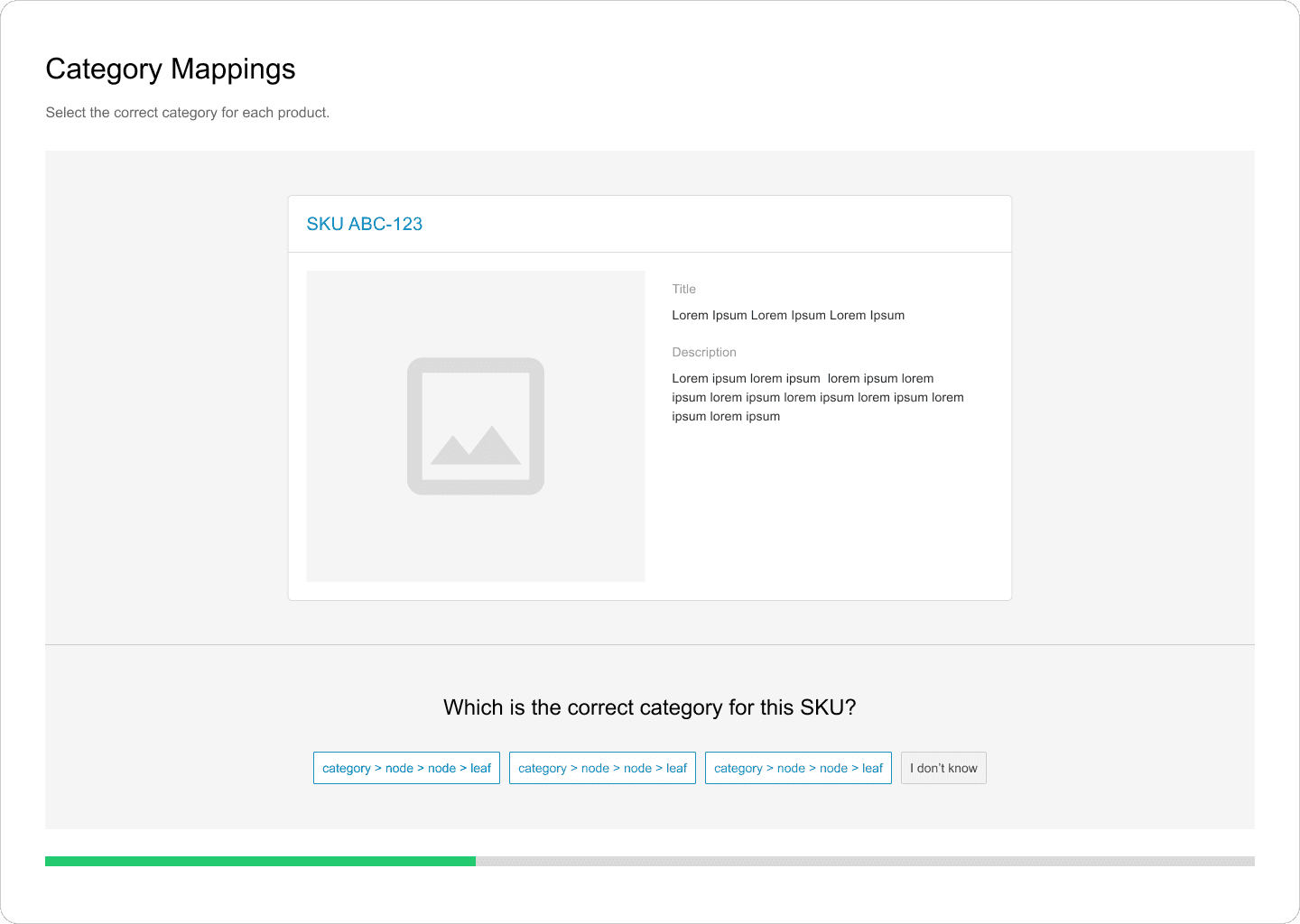

Users will annotate a sample set of between 20 and 100 products, depending on the ML model's confidence.

Users must have enough information about each product to identify its category (image, title, description, and possibly other fields).

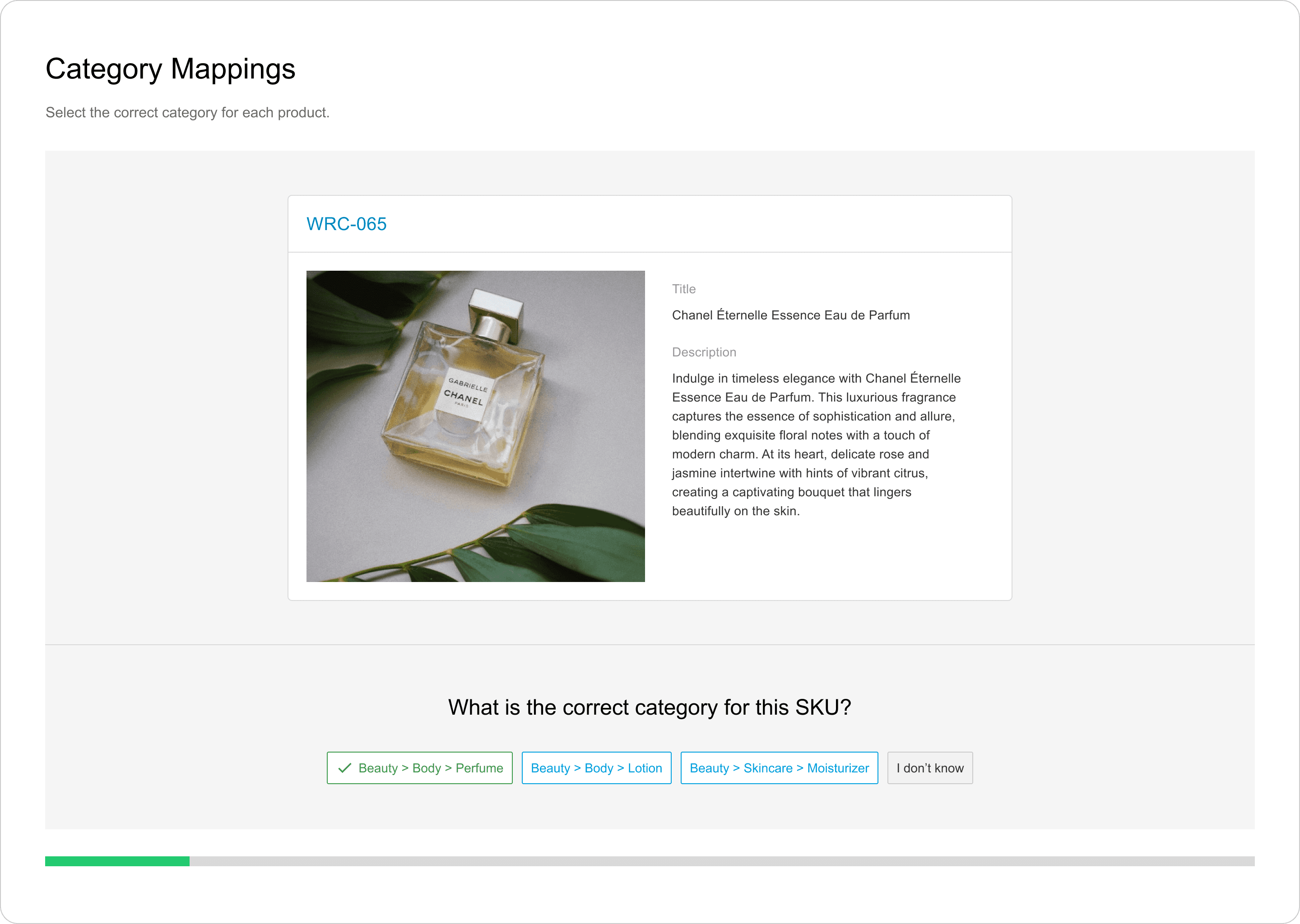

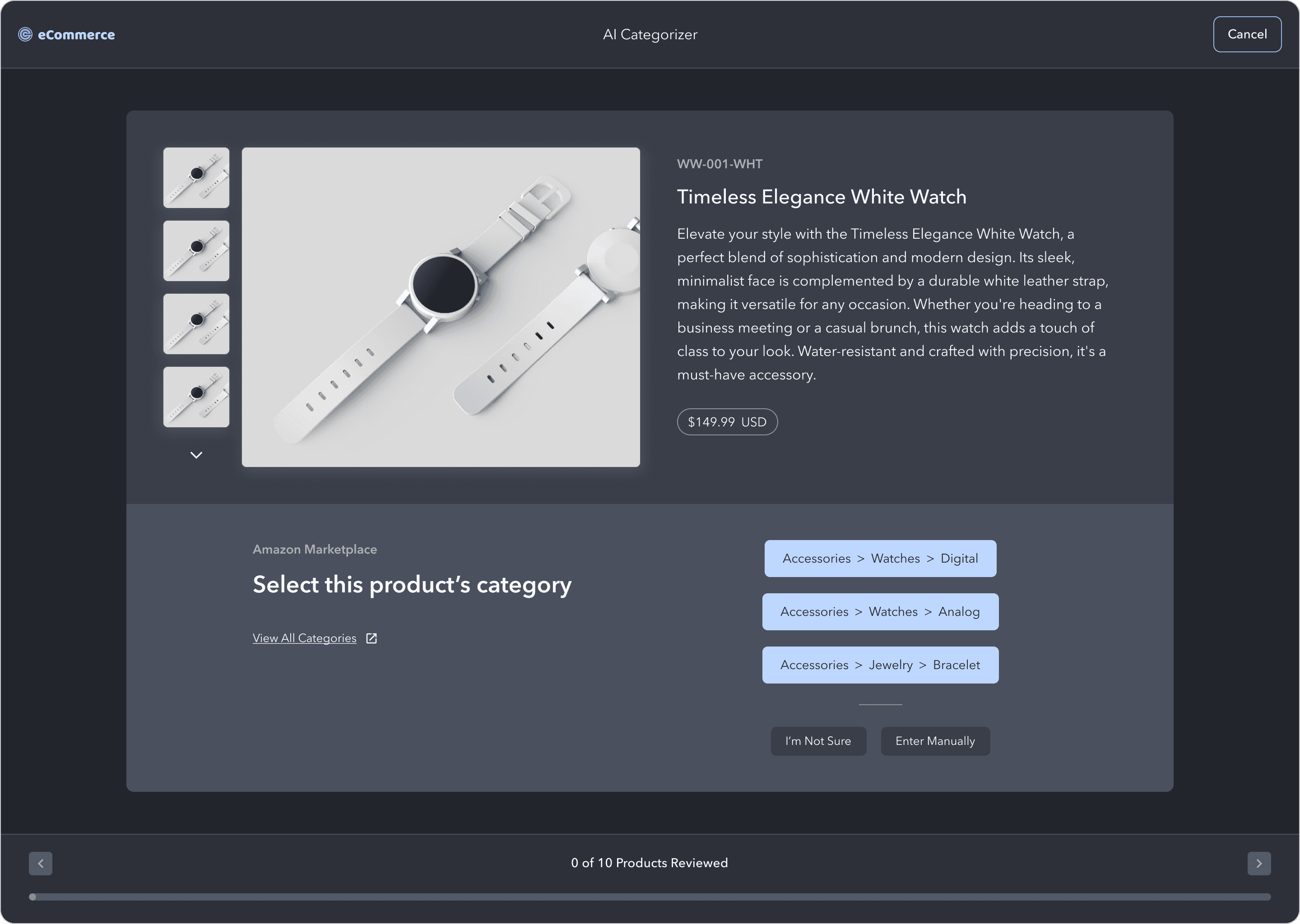

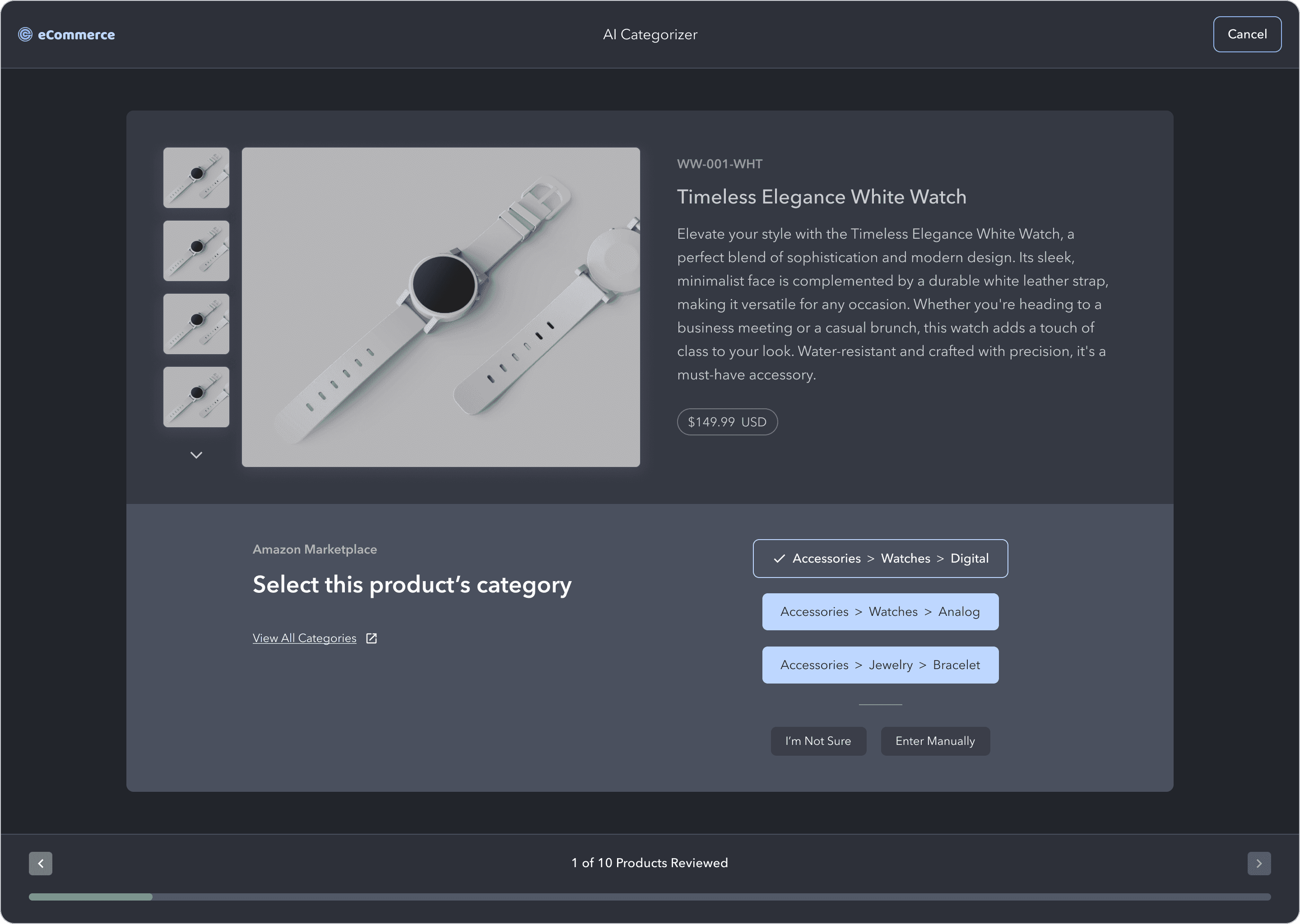

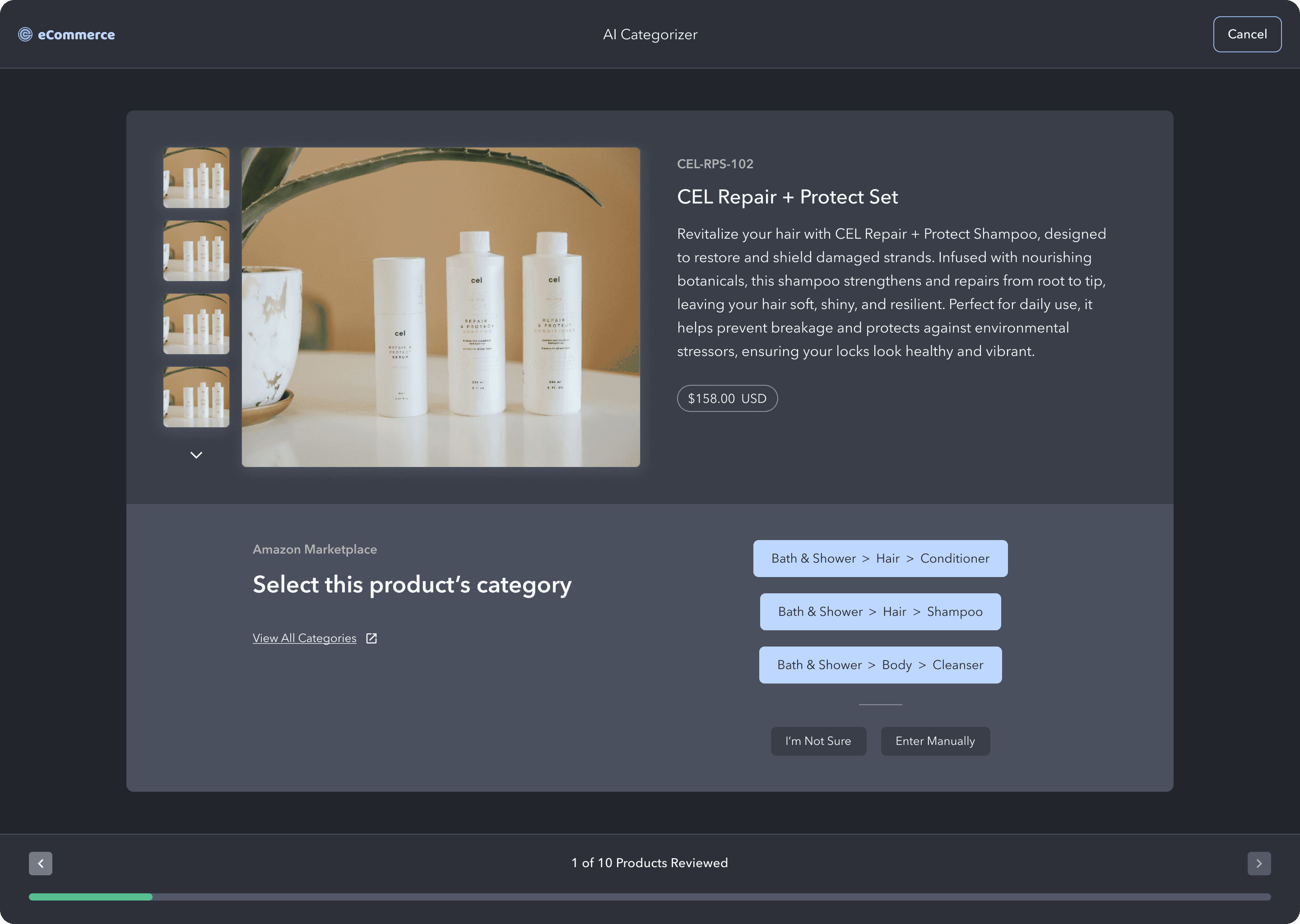

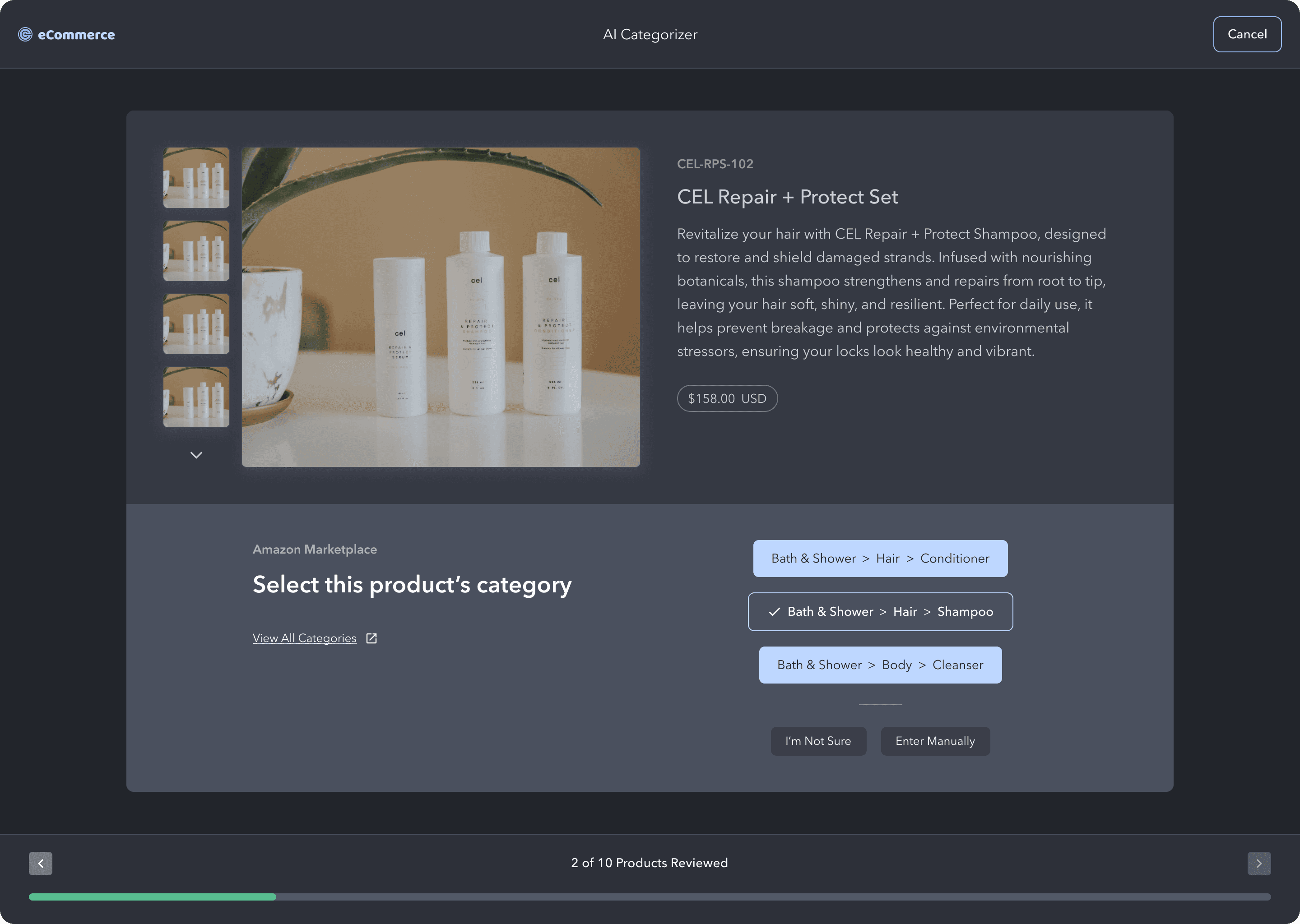

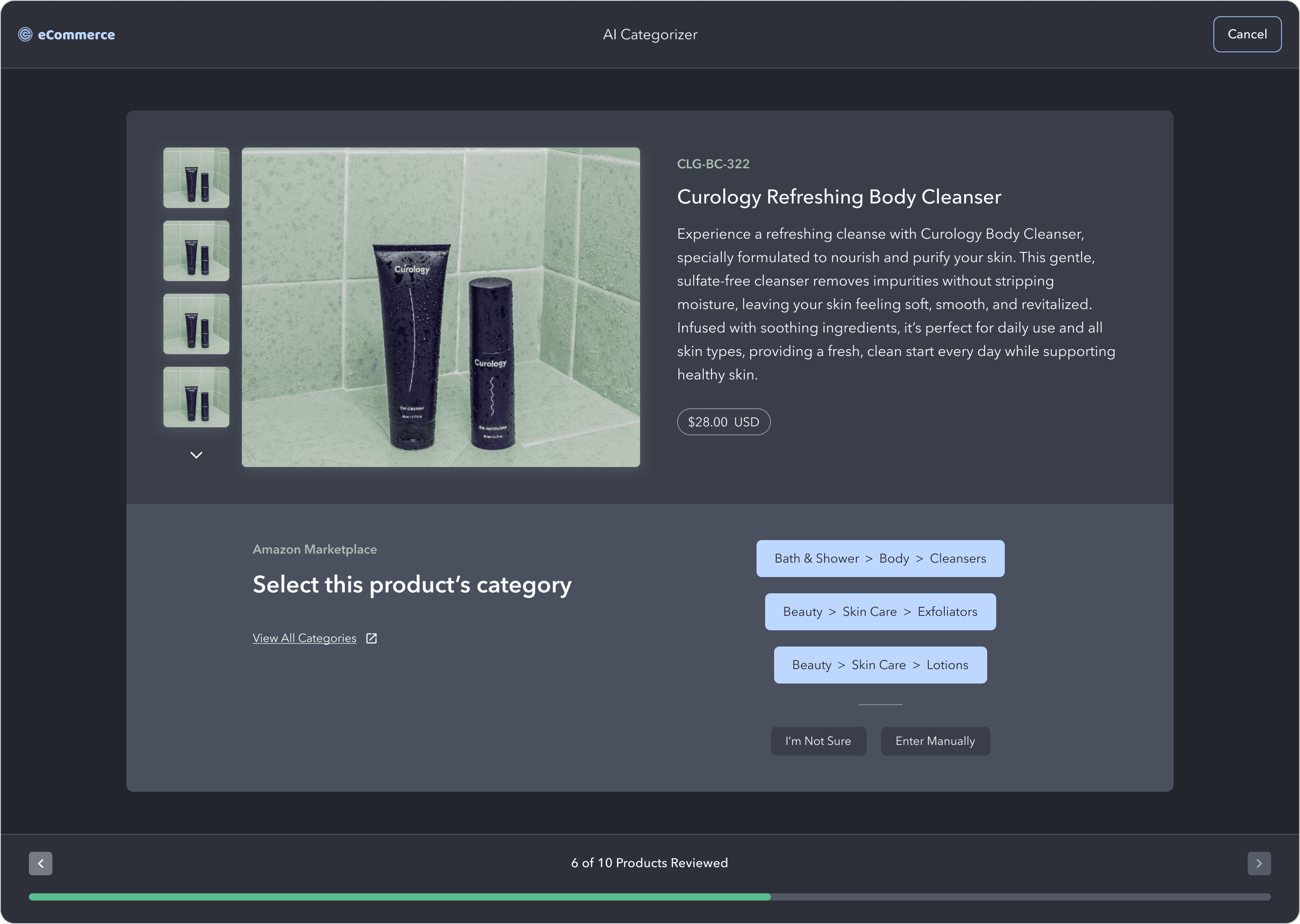

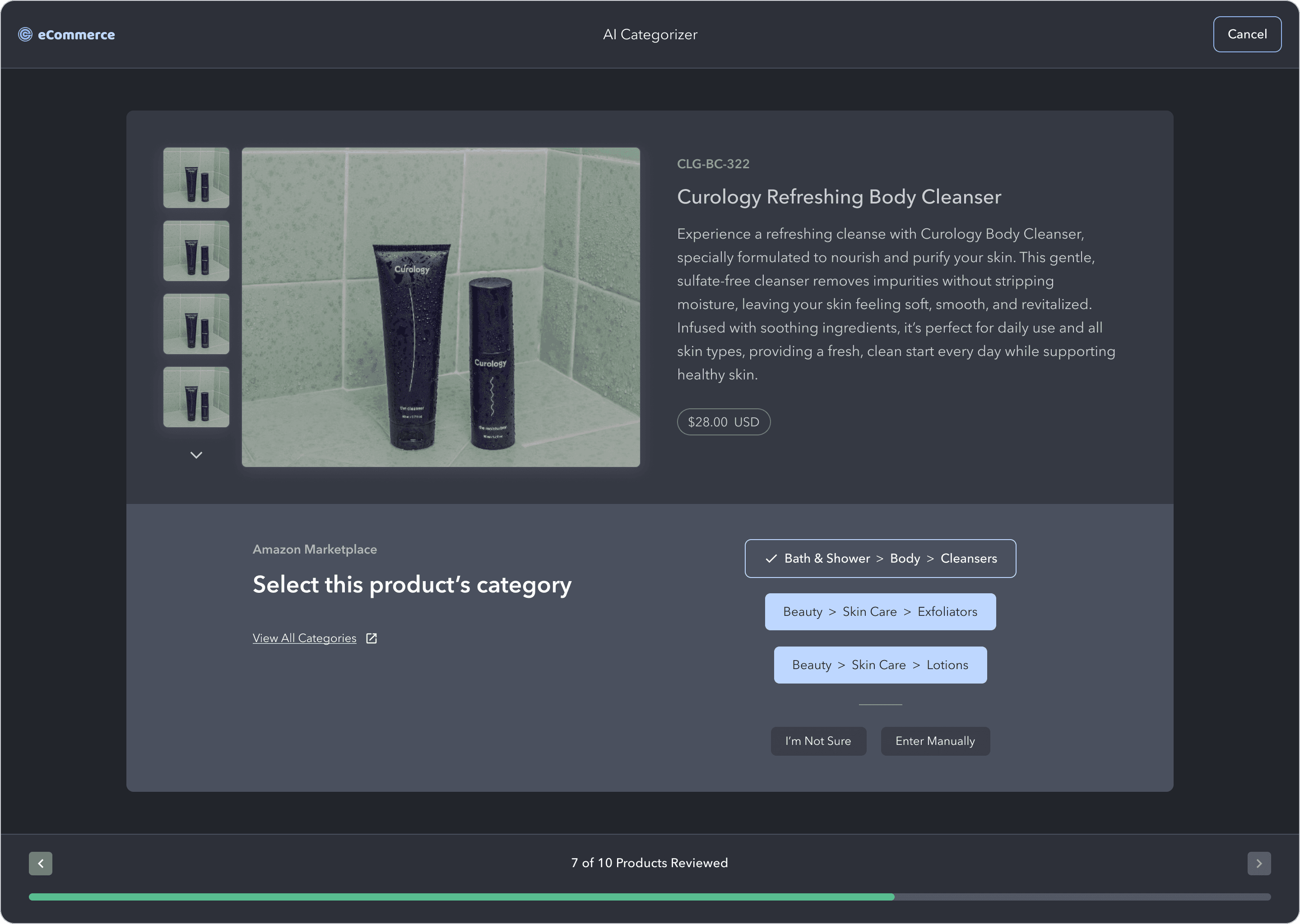

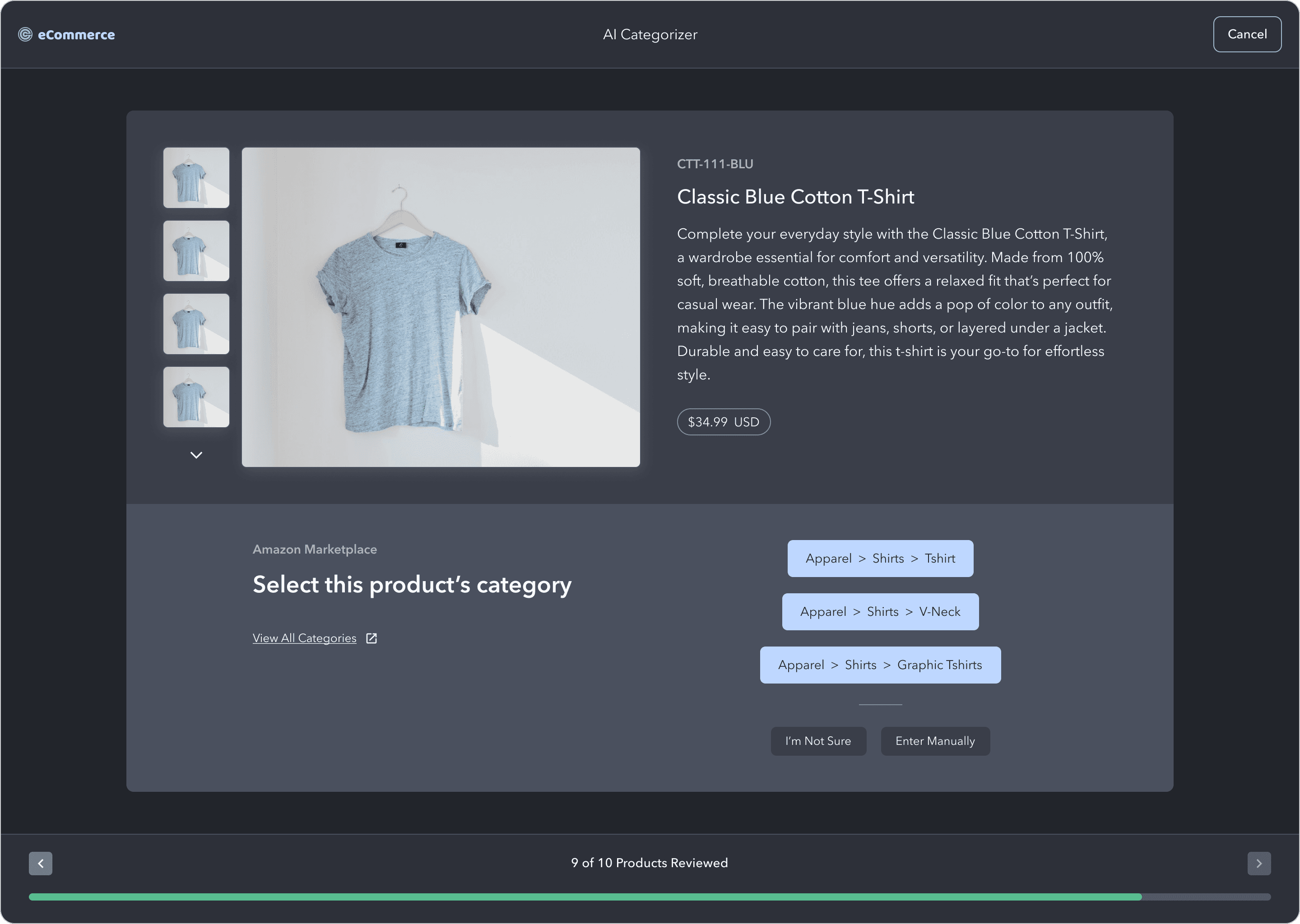

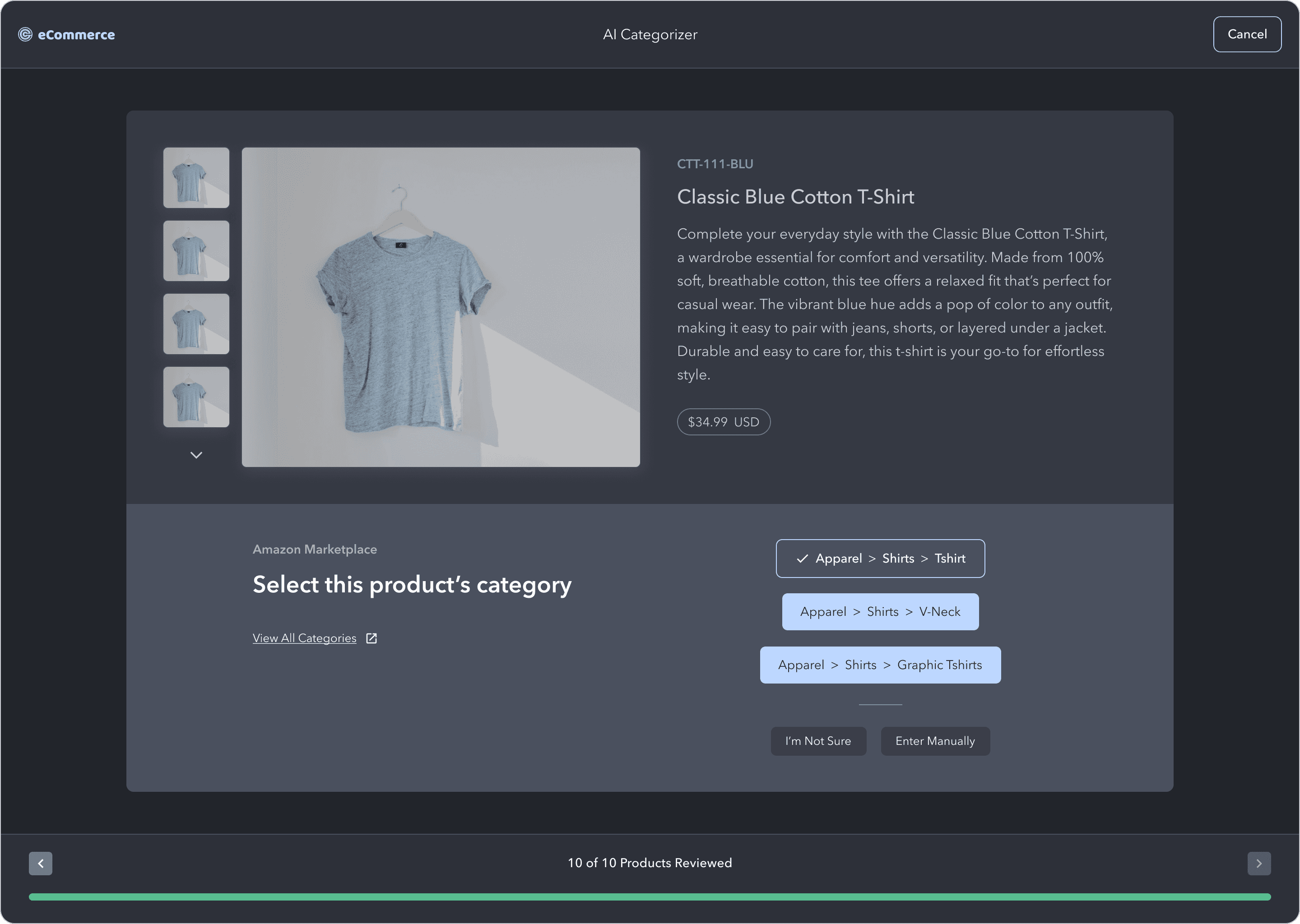

Users will be presented with 3 category predictions per product

Categories will be at the lowest subcategory level

Users can select an "I don't know" option to skip the product

Users must select a category for each product to finish the process

Users should see some sort of progress indicator

After users have reviewed the sample set of products, they can review and edit the final output

The experience should be fun and delightful, perhaps including gamification elements
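As an illustration of the three-prediction requirement, here is a minimal sketch assuming the model returns a probability for every lowest-level (leaf) subcategory. The `top_k_categories` helper and the category paths are hypothetical examples, not the product's actual taxonomy or code.

```python
import heapq


def top_k_categories(leaf_probs, k=3):
    """Return the k most likely leaf subcategories as (category, probability), highest first."""
    return heapq.nlargest(k, leaf_probs.items(), key=lambda item: item[1])


# Hypothetical model output: one probability per lowest-level subcategory.
leaf_probs = {
    "Clothing > Shoes > Running Shoes": 0.62,
    "Clothing > Shoes > Trail Shoes": 0.21,
    "Sports > Footwear > Track Spikes": 0.09,
    "Clothing > Socks > Athletic Socks": 0.05,
}
options = top_k_categories(leaf_probs)
# The UI would show these three options plus an "I don't know" choice to skip the product.
```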

Speed

Users can review products as quickly and easily as possible.

Accuracy

Users have all of the product info they need to select the correct category.

Confidence

Users should gain confidence in our product and in our AI / ML capabilities.

Easy Final Review

Users can easily review and edit the final output of categories for ALL of their products.

Delight

Users should enjoy this experience - it should feel easy and fun!

CONCEPT A

Users select the category for products one by one.

Pros

The product card is easily digestible

Plenty of room to provide as much info on the SKU as needed

CTA is front and center

Cons

How does the user know when they're done? A progress bar helps, but it's vague.

Will users be able to review many products quickly if they see just one at a time?

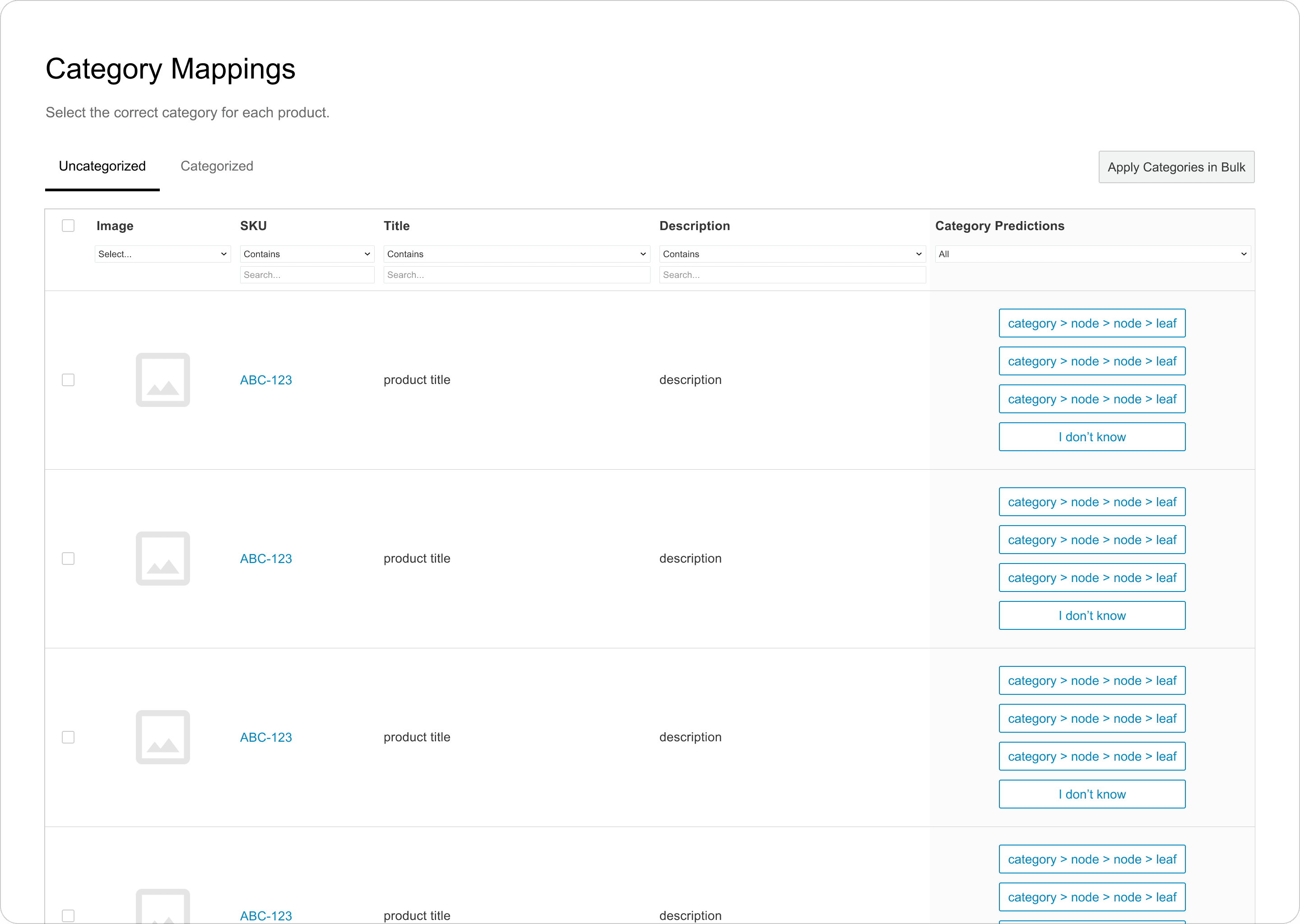

CONCEPT B

Users have a list of all products to categorize.

Pros

Users can filter products to focus on particular areas

Bulk action option so users can categorize multiple products at once

This table pattern is common in our app, so it would be familiar to users

Cons

Not much direction for the user

Smaller product image size

Ultimately, the team wasn't sure which option would meet our goal of users labeling products as fast as possible. There's an easy way to figure that out… user testing! 🙌

Participants

6 total users of varying experience levels

A/B Testing Strategy

In a Figma prototype, users will annotate a sample set of 20 products from their own catalog.

Users will complete this exercise on both Concept A (one at a time) and Concept B (list view).

We will measure how long it takes each user to annotate the sample set with each concept.

Goal

Determine which user experience produces the fastest annotation result.

Concept A

Testing Notes

Users were able to start annotating right away - the CTA was clear

Users thought this was actually fun - like a video game!

Users weren't sure what to expect when this process was finished

Concept B

Testing Notes

Users found filters and bulk options helpful - they're used to reviewing/editing product data that way

Users weren’t sure how to interact with the page right away

Users spent a lot of time up front trying to strategize how they could filter the products into helpful groups

Users found images the most helpful when categorizing, but in a table the images are small

🎉 Concept A 🎉

Users were able to label the product's category in 4 seconds on average!

Since the primary action was prominently featured, users were able to focus on their goal and categorize products quickly and easily. Concept A streamlined this process, making it feel engaging and efficient. In contrast, Concept B, which included filtering and bulk action options (a common pattern in our application), caused users to spend excessive time upfront strategizing how to group their products. This approach also led to more mistakes when users categorized multiple products at once.

One of our design goals was to make the process as enjoyable as possible, so we were thrilled when a user compared Concept A to playing a video game!

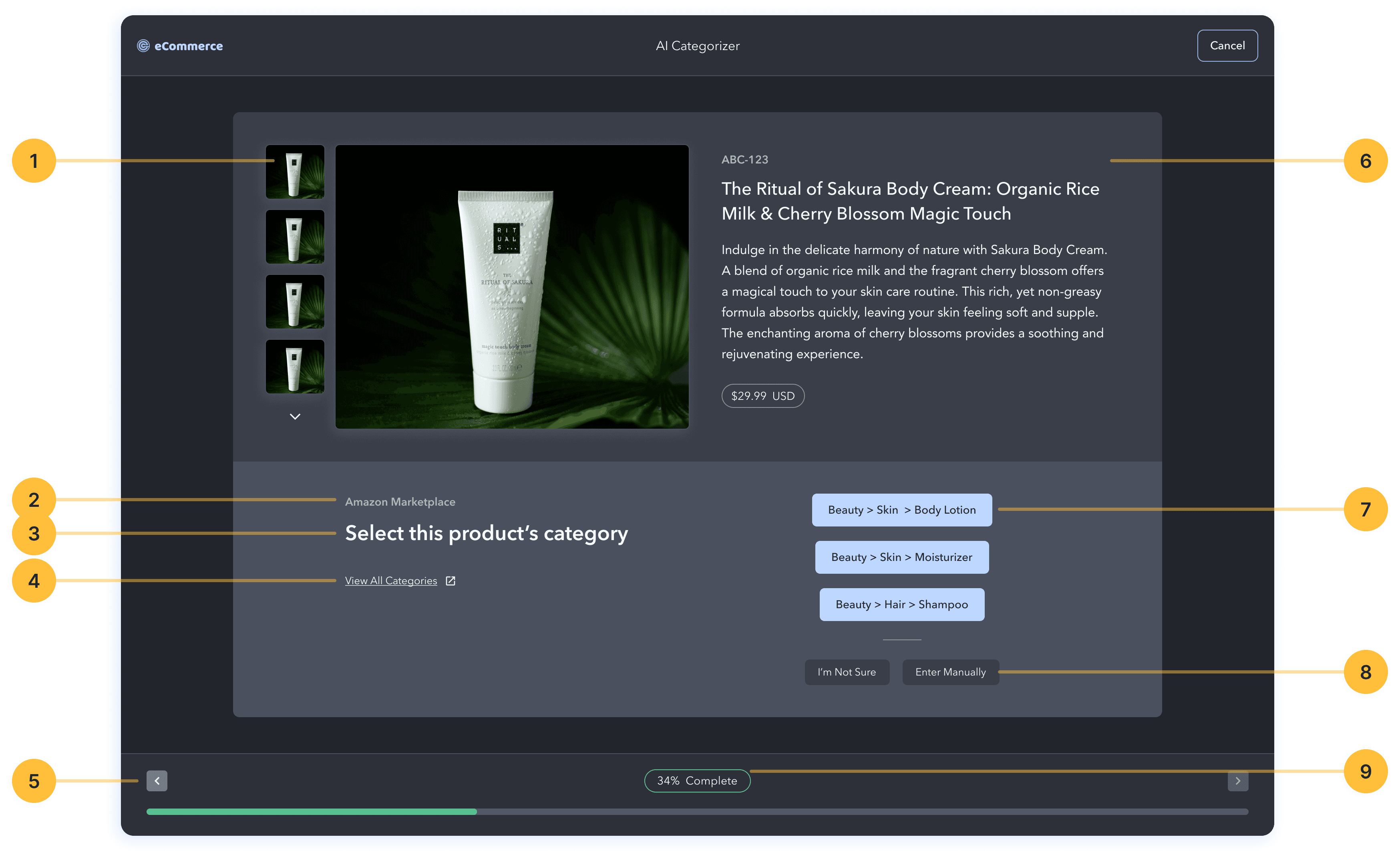

Added all product images, since users said images were the most helpful

Added a callout for which marketplace the user is selecting categories for

Shortened the action statement

Added a quick link for the user to view all of the marketplace's categories if necessary

Added the ability for users to go backwards in case they made a mistake

Restructured the product data layout to resemble a product listing, and added the price data point

Stacked the category options so they're easier to compare

Added an option for the user to enter a category if none of our predictions are correct

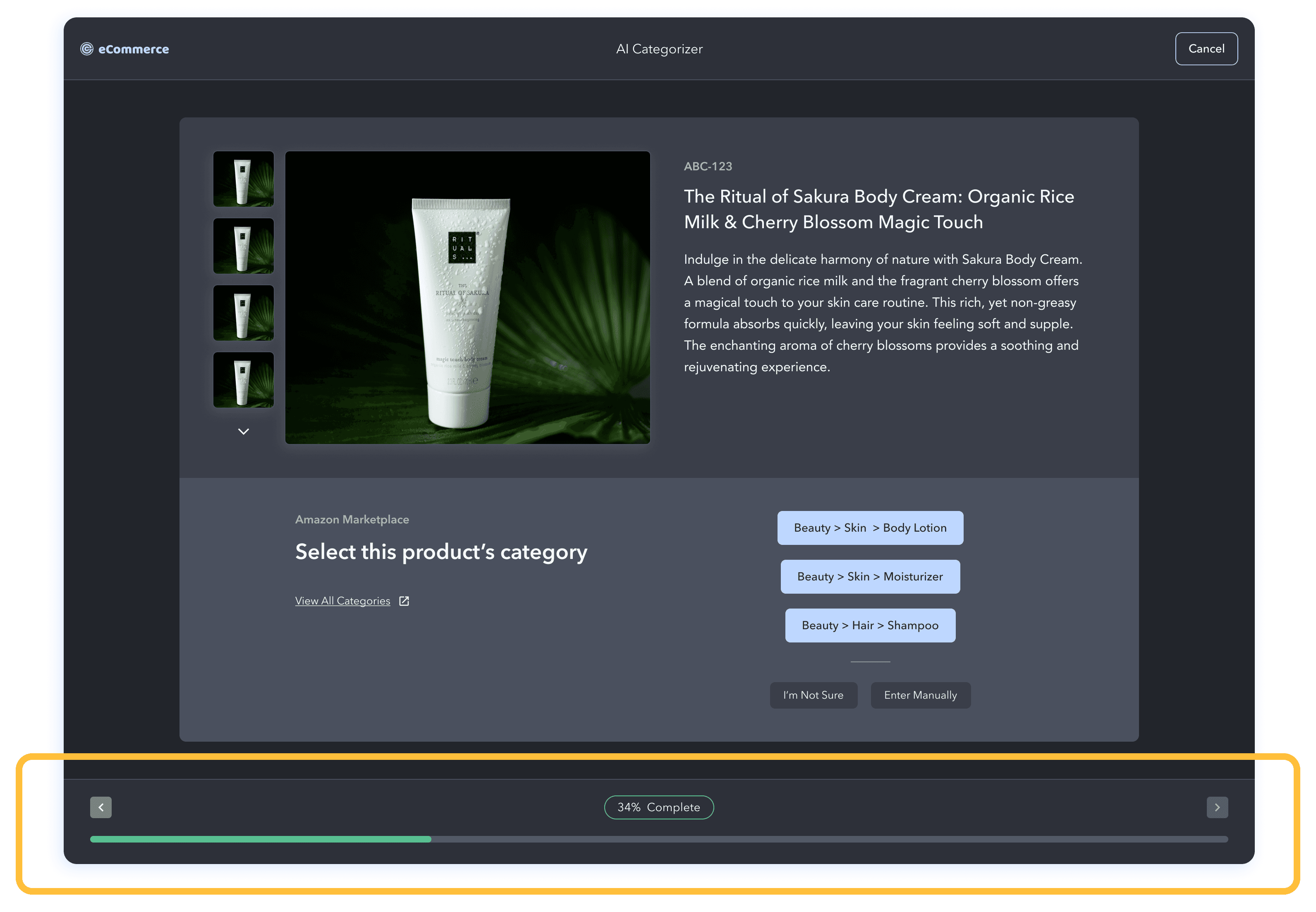

Added an extra progress callout so the user knows where they are in the process

We initially expected users to label products until the progress bar reached 100%, indicating that the machine learning model had gathered sufficient data to categorize the rest of the user’s product catalog. This approach would require real-time communication between the model and the interface to update the progress bar, but our engineers indicated that this isn't feasible at this stage.

This introduces several challenges, both technically and in terms of user experience. We need to address questions such as: When will the user know they have completed the labeling process? How do we effectively communicate progress to them?

Instead of the model learning in real time, users will label a predetermined number of products, based on a percentage of the products in their catalog. The machine learning model will do more work upfront (instead of in real time) to select products that span diverse categories and that it is particularly uncertain about.
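This upfront selection step resembles uncertainty sampling from active learning. Here is a minimal sketch under several assumptions. It uses a hypothetical 5% sample share clamped to the 20 to 100 product range from the requirements, and it treats each product's predicted category as a rough stand-in for diversity. Products are picked round-robin across categories, least confident first. None of these names or values come from the actual implementation.

```python
import math
from collections import defaultdict

# Hypothetical values: the 20-100 range comes from the requirements, the 5% does not.
SAMPLE_PERCENT = 5
MIN_SAMPLE, MAX_SAMPLE = 20, 100


def sample_size(catalog_size):
    """Products to label: a share of the catalog, clamped to [MIN_SAMPLE, MAX_SAMPLE]."""
    n = math.ceil(catalog_size * SAMPLE_PERCENT / 100)
    n = max(MIN_SAMPLE, min(MAX_SAMPLE, n))
    return min(n, catalog_size)  # never ask for more products than exist


def select_for_labeling(predictions, n):
    """predictions maps product_id -> (predicted_category, confidence).

    Picks the least confident products while spreading picks across
    predicted categories (round-robin), as a rough proxy for diversity.
    """
    by_category = defaultdict(list)
    for product_id, (category, confidence) in predictions.items():
        by_category[category].append((confidence, product_id))

    # Within each category, least confident first; categories are visited
    # starting with the one whose weakest prediction is least confident.
    queues = sorted((sorted(items) for items in by_category.values()),
                    key=lambda q: q[0][0])

    selected = []
    depth = 0
    while len(selected) < n:
        added = False
        for queue in queues:
            if depth < len(queue) and len(selected) < n:
                selected.append(queue[depth][1])
                added = True
        if not added:  # every category is exhausted
            break
        depth += 1
    return selected


preds = {
    "a": ("Shoes", 0.9), "b": ("Shoes", 0.3),
    "c": ("Bags", 0.5), "d": ("Bags", 0.2),
    "e": ("Hats", 0.7),
}
picked = select_for_labeling(preds, 4)  # ["d", "b", "e", "c"]
```

Here the least confident Bags, Shoes, and Hats products are all picked before a second Bags product, so no single category dominates the sample.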

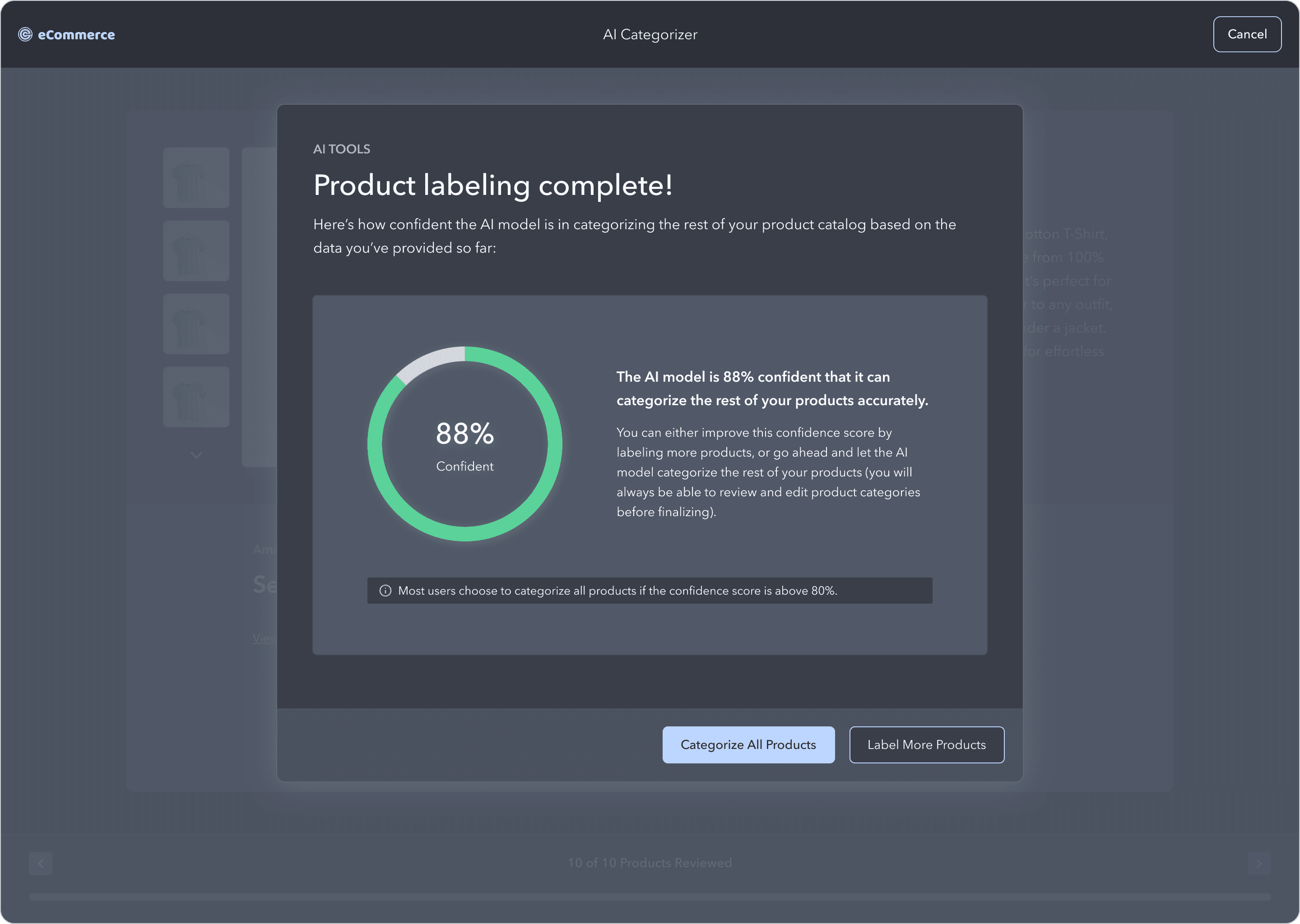

After completing the labeling of this set, the user will receive a confidence score indicating how confident the model is in labeling the remaining products in their catalog. The user can then choose whether to let the model proceed with labeling the rest of their products or to label additional products to improve the confidence score.
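One simple way to compute a score like this is to average the model's top-category confidence over the products it would label on its own. This is a hypothetical sketch. `catalog_confidence`, `suggested_action`, and the target of 85 are assumptions, not the shipped scoring logic, and the user still makes the final call.

```python
from statistics import fmean

# Hypothetical score at which the UI might recommend letting the model finish.
RECOMMENDED_SCORE = 85


def catalog_confidence(remaining_confidences):
    """Average top-category confidence for the products the model would label, as 0-100."""
    if not remaining_confidences:
        return 100  # nothing left for the model to label
    return round(100 * fmean(remaining_confidences))


def suggested_action(score, target=RECOMMENDED_SCORE):
    """The user still decides; this only picks which option to highlight."""
    return "auto_label_rest" if score >= target else "label_more"


score = catalog_confidence([1.0, 0.5])  # 75
action = suggested_action(score)        # "label_more"
```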

Design Changes

Instead of showing a percentage for progress, show the number of products to review. This keeps the gamification element that users liked in testing.

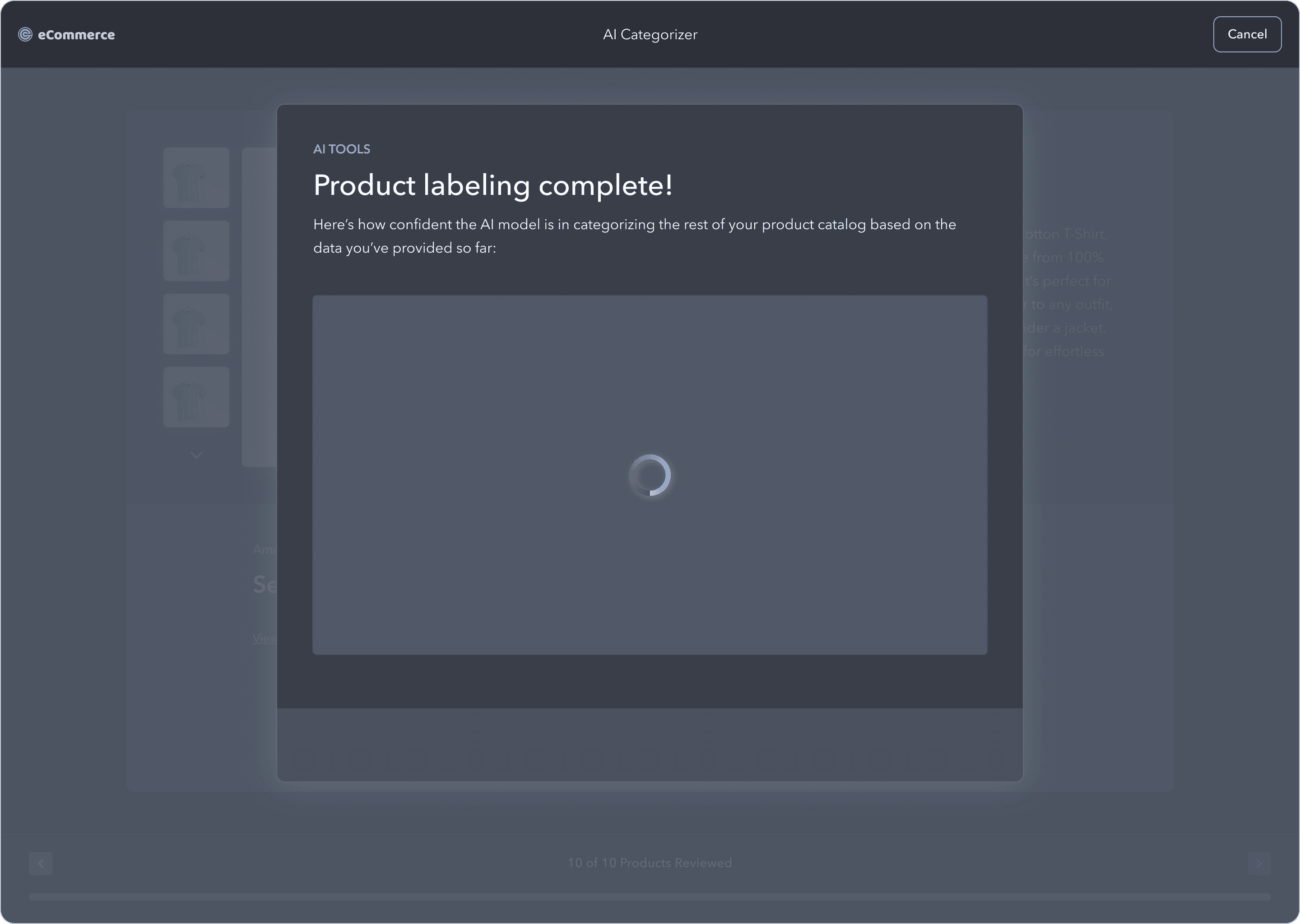

New screen - once the user finishes labeling a set of products, show a confidence score with options to either move forward or label more products

More loading states will be required while the model is generating the product set and the confidence score

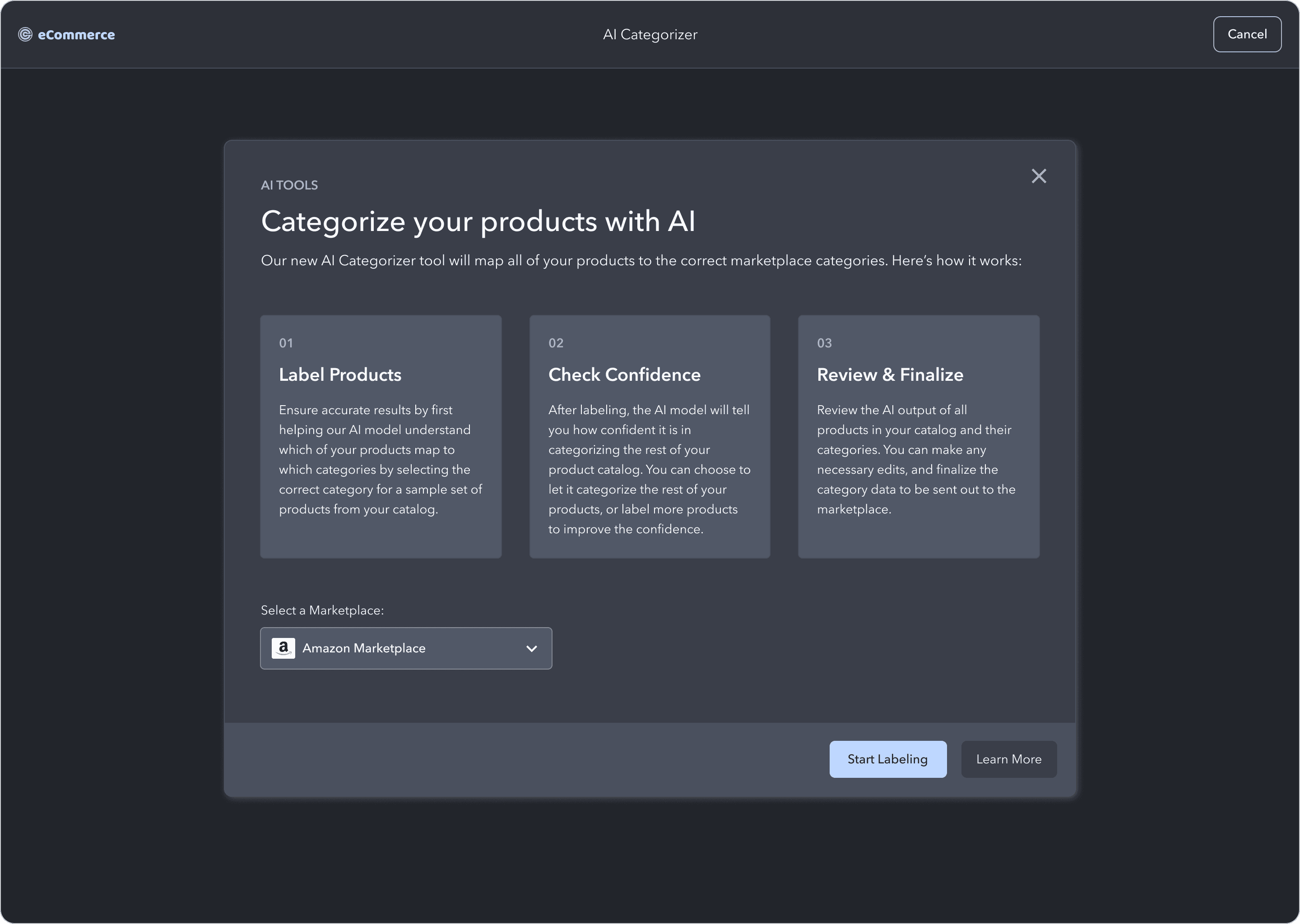

The Introduction

We added a screen to introduce the user to the concept of AI mapping and the overall process they're about to begin.

Users can enter this flow from a couple of areas within the app, so we added a marketplace dropdown to let the user confirm they're mapping categories for the correct channel.

Human-in-the-Loop (HITL)

This is where users review each product and select the correct category to train the AI model. The product image is front and center, and there's more product data if the user needs it.

It took many iterations to get the transition timing right and to ensure the interactions between products were clear and smooth.

Confidence Score

After the user labels their last product, there's a celebratory animation while the AI confidence score loads.

The user can decide to either move forward and allow the AI model to categorize the rest of their products, or to repeat the labeling process to increase the confidence before moving forward.

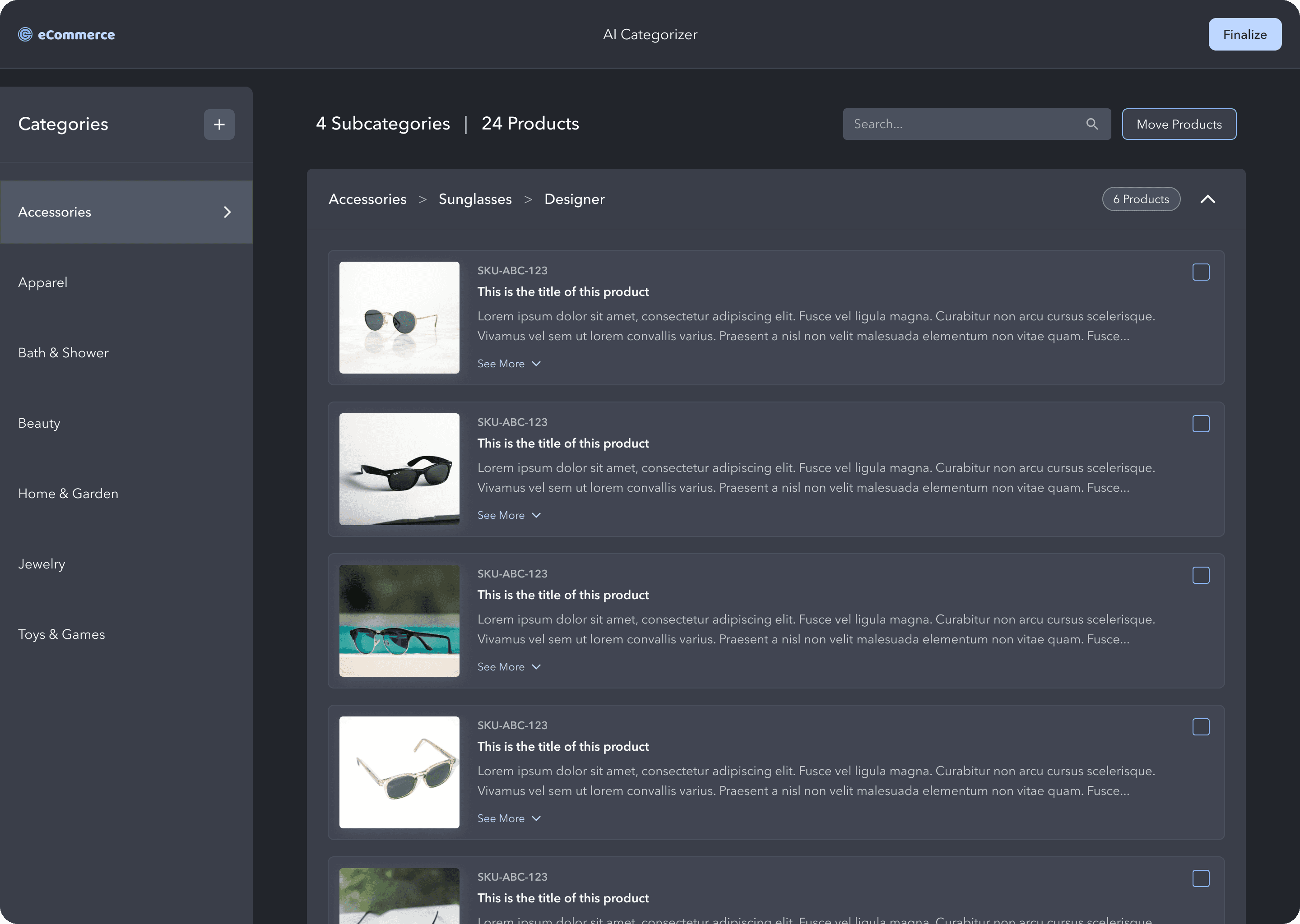

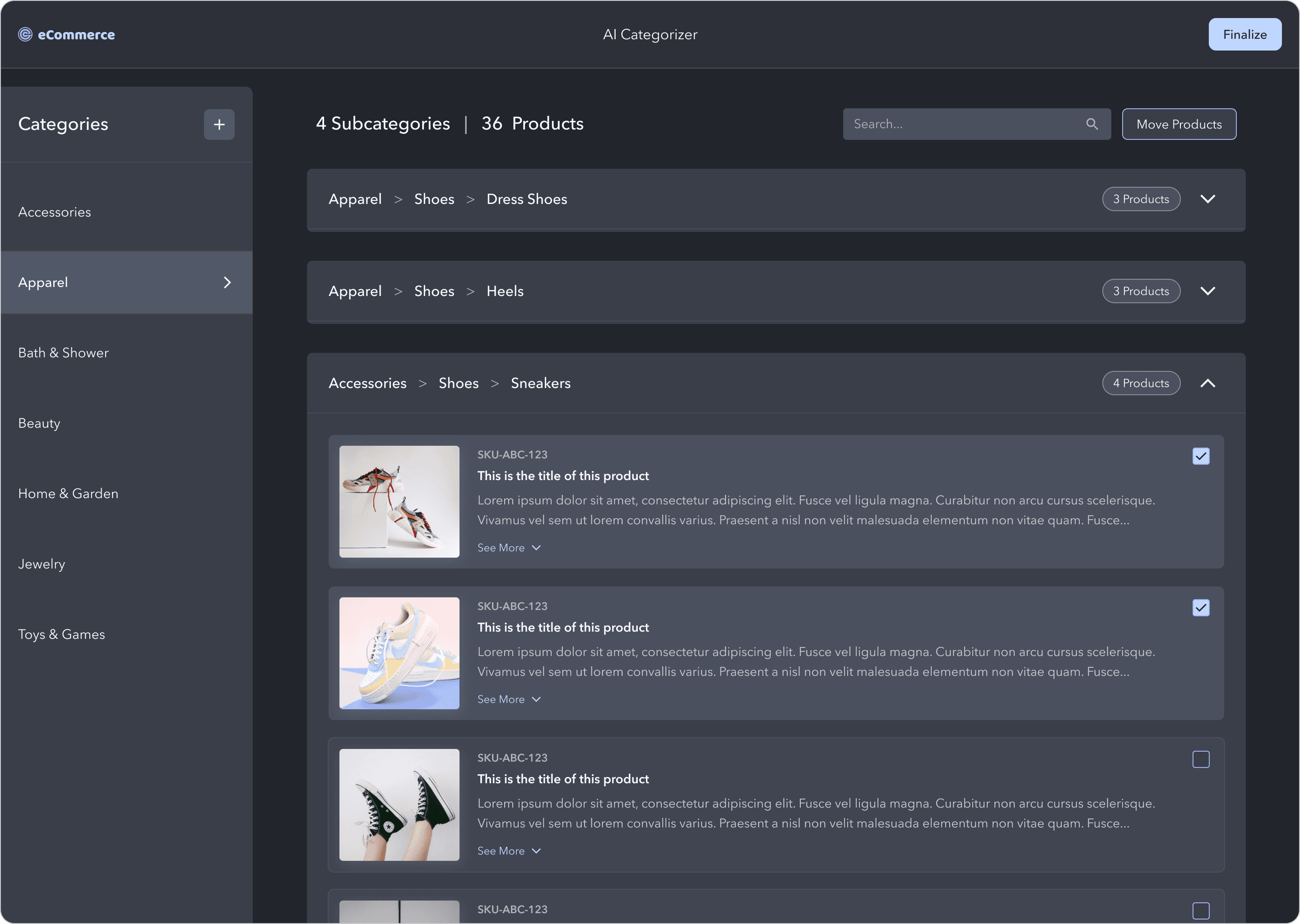

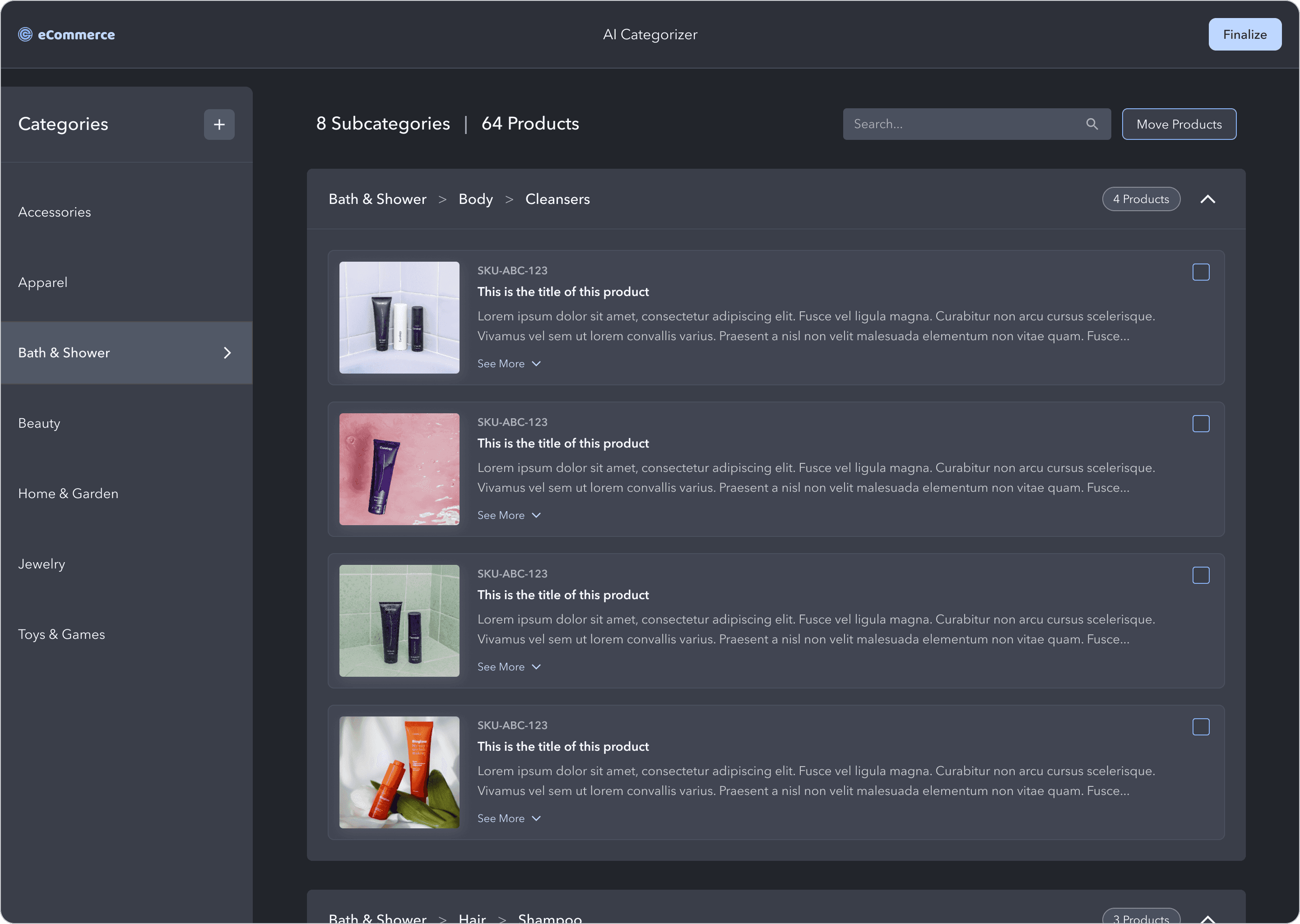

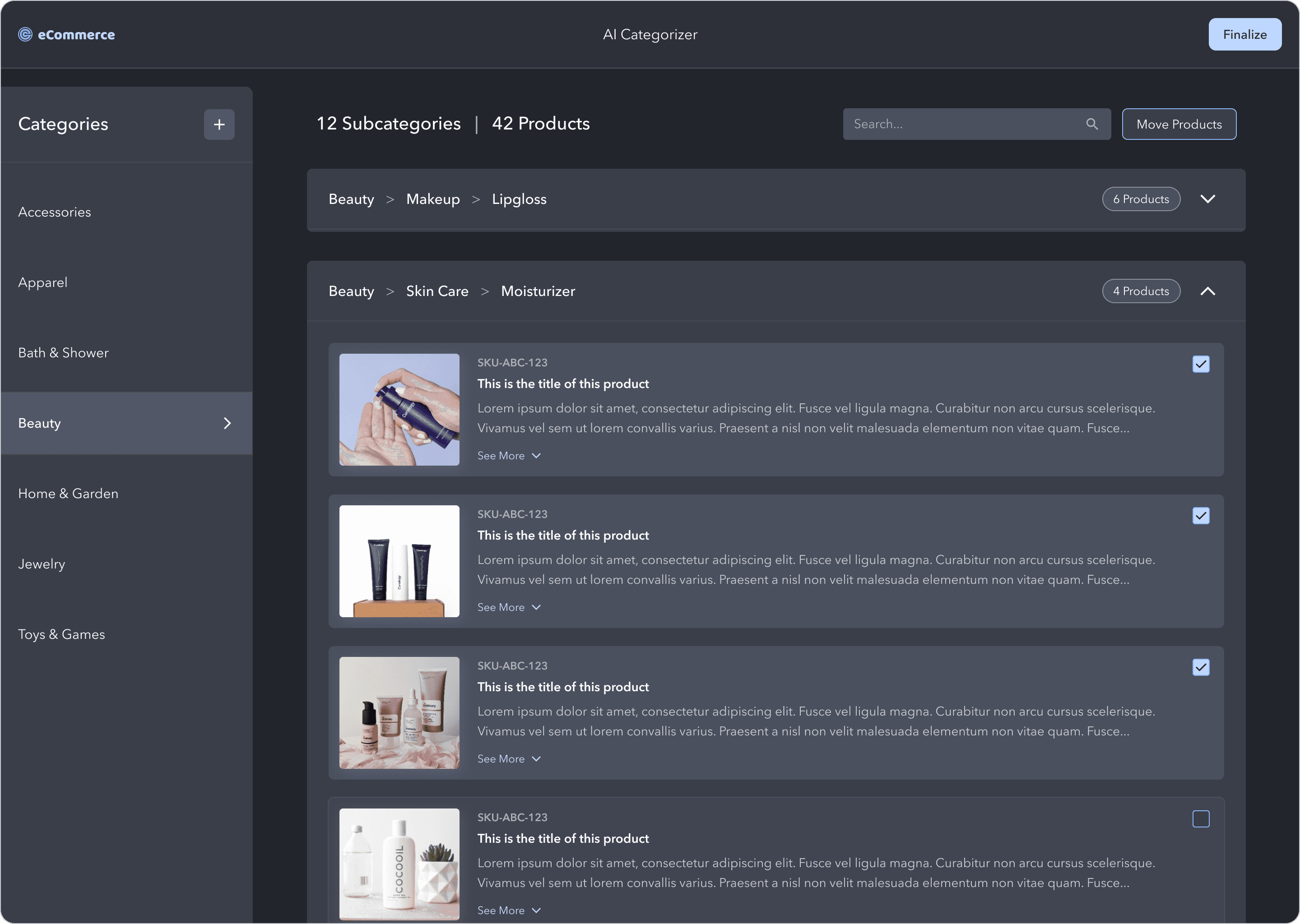

Review AI Results

Here, the user reviews how all of their products were categorized by the AI model. There's a top-level category panel on the left, and the subcategory sections on the right.

Users can select multiple products via the checkbox in the product cards to move them to a different category in bulk.

Once the user is happy with the product categories, they select Finalize in the top right to finish the process.

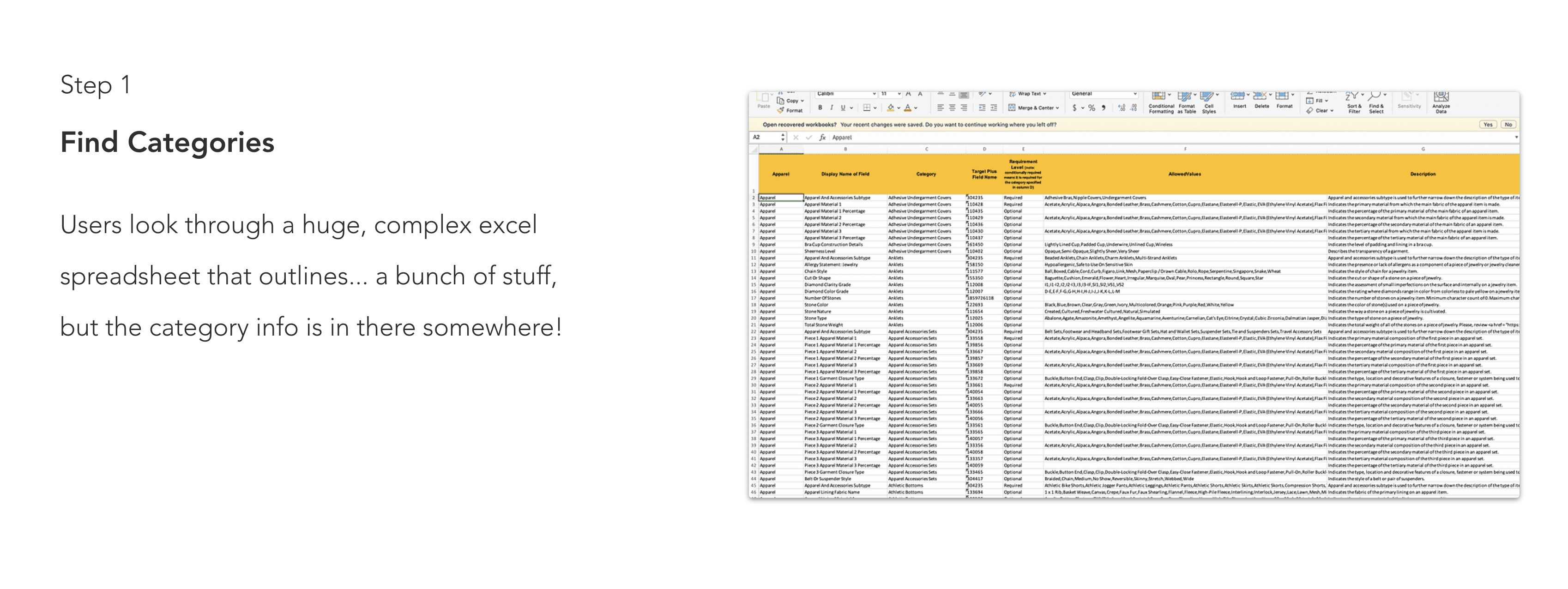

Greatly reduced user time and effort

BEFORE

Hours to days

Mapping categories manually in spreadsheets was a nightmare and took forever.

AFTER

Minutes!

With the AI "magic mapper," as users were calling it, they could categorize all of their products for a marketplace in a matter of minutes!

An AI innovation award!

The MVP version of this product has already won my company an award from Hermes for AI innovation!